0) 준비

Local Clone· dev Branch 생성 · Rulset Setting

# 원하는 작업 디렉토리에서

git clone <https://github.com/daeun-ops/hybrid-mlops-demo.git>

cd hybrid-mlops-demo

# 원격 브랜치 확인

git branch -r

# main 최신화 & 로컬 dev 브랜치 생성

git checkout main

git pull origin main

git checkout -b dev

git push -u origin dev

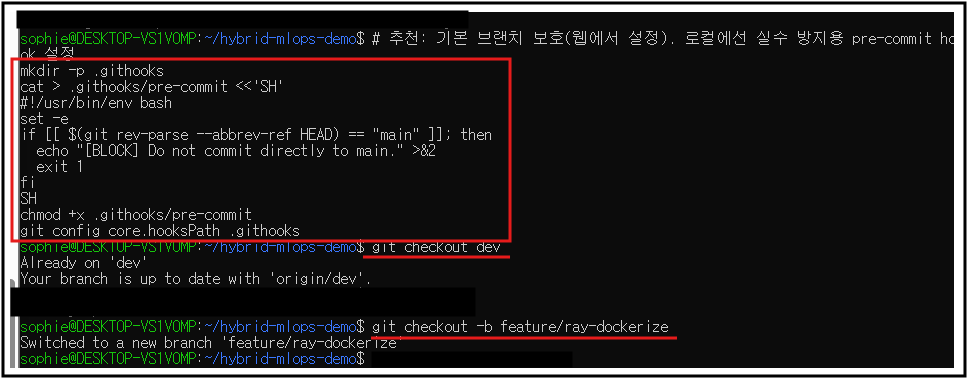

# 추천: 기본 브랜치 보호(웹에서 설정). 로컬에선 실수 방지용 pre-commit hook 설정

mkdir -p .githooks

cat > .githooks/pre-commit <<'SH'

#!/usr/bin/env bash

set -e

if [[ $(git rev-parse --abbrev-ref HEAD) == "main" ]]; then

echo "[BLOCK] Do not commit directly to main." >&2

exit 1

fi

SH

chmod +x .githooks/pre-commit

git config core.hooksPath .githooks

Merge 전략

- 작업: feature/* 브랜치에서 진행 → PR 대상은 dev

- 테스트/검증 OK → dev에만 머지

- main은 실제 배포 시점에만 (나중에) PR 생성.

git checkout dev

git pull

git checkout -b feature/ray-dockerize

1) feat(ray) Branch

1-1. 파일 추가 (vim/vi 사용 주의...)

- vim 입력: i → 아래 전체 붙여넣기 → 저장 Esc → :wq ( 이 정돈 다들 알겠지.... 그래도 혹시 모르니까..)

ray_inference/serve_app.py

mkdir -p ray_inference

vim ray_inference/serve_app.py

from fastapi import FastAPI, Response

from ray import serve

from prometheus_client import Counter, Histogram, generate_latest, CONTENT_TYPE_LATEST

import torch, time

app = FastAPI()

serve.start(detached=True)

REQ_TOTAL = Counter("inference_requests_total", "Total inference requests")

REQ_LAT = Histogram("inference_request_latency_seconds", "Inference latency (s)")

@app.get("/metrics")

def metrics():

return Response(generate_latest(), media_type=CONTENT_TYPE_LATEST)

@serve.deployment(route_prefix="/inference")

@serve.ingress(app)

class InferenceService:

def __init__(self):

self.device = "cuda" if torch.cuda.is_available() else "cpu"

print(f"[INFO] Using device: {self.device}")

self.model = torch.nn.Linear(4, 2).to(self.device)

self.model.eval()

@app.post("/")

def infer(self, payload: dict):

REQ_TOTAL.inc()

start = time.time()

x = payload.get("input", [1.0, 2.0, 3.0, 4.0])

x = torch.tensor(x, dtype=torch.float32, device=self.device)

with torch.no_grad():

y = self.model(x)

REQ_LAT.observe(time.time() - start)

return {"device": self.device, "output": y.tolist()}

# 간단 헬스체크

@app.get("/healthz")

def healthz():

return {"ok": True}

InferenceService.deploy()

ray_inference/Dockerfile (CUDA 11.6: 현재 저의 랩탑 Driver와 호환)

vim ray_inference/Dockerfile

FROM nvidia/cuda:11.6.2-base-ubuntu20.04

ENV DEBIAN_FRONTEND=noninteractive \\

PYTHONDONTWRITEBYTECODE=1 \\

PYTHONUNBUFFERED=1

RUN apt-get update && apt-get install -y --no-install-recommends \\

python3 python3-pip ca-certificates curl && \\

ln -sf /usr/bin/python3 /usr/bin/python && \\

pip3 install --no-cache-dir --upgrade pip && \\

rm -rf /var/lib/apt/lists/*

RUN pip3 install --no-cache-dir "ray[serve]==2.9.3" fastapi uvicorn prometheus-client \\

&& pip3 install --no-cache-dir torch==1.13.1+cu116 -f <https://download.pytorch.org/whl/torch_stable.html>

WORKDIR /app

COPY serve_app.py /app/serve_app.py

EXPOSE 8000

CMD ["python", "serve_app.py"]

루트 docker-compose.yml

vim docker-compose.yml

version: "3.8"

services:

ray-inference:

build:

context: ./ray_inference

image: daeun/ray-inference:cu116

container_name: ray-inference

ports:

- "8000:8000"

deploy:

resources:

reservations:

devices:

- capabilities: ["gpu"]

environment:

- RAY_memory_monitor_refresh_ms=0

restart: unless-stopped

IDE가 있으신 분들은 IDE를 통해 작업해주시면 됩니다.

저는 모델 서빙하는 PipeLine을 구축하는 프로젝트를 하는 것이라서

IDE가 제 컴퓨터 메모리를 잡아먹는게 너무 싫어서 VIM 으로 작업합니다.

K8s 때문에 VIM 이 더 익숙하기도 합니다. . . . . . . .

1-2. WSL Test

docker compose build ray-inference

docker compose up -d ray-inference

# 기능 확인

curl -s <http://127.0.0.1:8000/healthz>

curl -s -X POST <http://127.0.0.1:8000/inference> \\

-H 'Content-Type: application/json' -d '{"input":[10,20,30,40]}'

curl -s <http://127.0.0.1:8000/metrics> | head

1-3. Commit & Push & PR

git add ray_inference/serve_app.py ray_inference/Dockerfile docker-compose.yml

git commit -m "feat(ray): containerize Ray Serve (CUDA 11.6) + /metrics + /healthz"

git push -u origin feature/ray-dockerize

https://github.com/daeun-ops/hybrid-mlops-demo

GitHub - daeun-ops/hybrid-mlops-demo

Contribute to daeun-ops/hybrid-mlops-demo development by creating an account on GitHub.

github.com

'DevOps' 카테고리의 다른 글

| [MLOps] Hybrid Demo : GPU Inference → Metrics → Grafana Dashboard 실시간 연결하기 (0) | 2025.10.31 |

|---|---|

| [MLOps] hybird-mlops-demo 실행 순서 feat. 자꾸 까먹어서 내가보려고 작성한 글 (0) | 2025.10.28 |

| [MlOps]Observability 맛보기: FastAPI·Ray Log를 Local에서 살펴보기 (0) | 2025.10.26 |

| [MlOps] Airflow 학습 DAG부터 Ray Serve 추론, Minikube까지 (0) | 2025.10.26 |

| [MlOps] Local MLOps 실습 환경 구축 (Airflow + MLflow) (0) | 2025.10.26 |